or why algorithmic design has political implications

Text by Orestis Papakyriakopoulos and Andreas Lingg

The consolidation of the Internet as our main network of communication, the development of hardware with increased computational efficiency, the invention of the smartphone, and the ability to project human behaviour into numbers created new technological applications that impact all aspects of society. Algorithmic decision-making systems, forms of Artificial Intelligence, online social networking platforms, data sharing systems and predictive tools have invaded everyday life, leading to the transformation of human behaviour, socialization, economic markets, and political conduct.

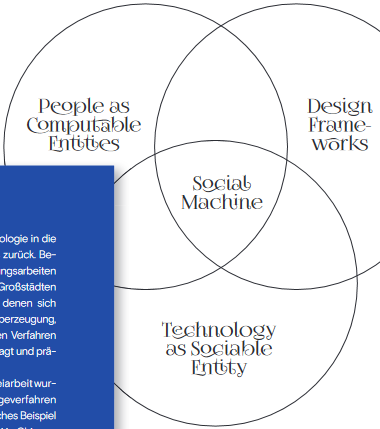

Algorithms, society, and communication are coupled in complex and continuous ways, generating new forms of socio-algorithmic ecosystems. The inventor of the World Wide Web, Tim Berners-Lee, defined such ecosystems, in which individuals and algorithms participate and interact, as social machines. Social machines are not actually machines, nor do they depict mechanistic phenomena. On the contrary, they are systems in which humans and algorithms are detached from their materiality, forming complex interaction patterns. Online social networks, algorithmic decision-making (ADM) systems and search engines are all types of social machines, with individuals, software and hardware constantly interacting and resulting in emergent system states.

In social machines, computability is not an exclusive right of the machines, nor is sociability an exclusively human trait. For example, human behaviour is projected into metadata which is then fed into recommendation algorithms, deep learning models, or computer vision software. Similarly, the decision of an ADM system to hire or fire an employee replaces the human resources manager in a company’s personnel network. What complements processes such as the above are the design frameworks that guide the behaviour of individuals and the application of technologies (figure 1). It is these design frameworks that actually shape how technology impacts society: the design principles of a credit system influence the behaviour of citizens, defining people’s feasible action space and forming their social goals. Similarly, a social media recommendation system suggests content to its users in a way that aligns with the company’s financial goals, while a dating app’s algorithm shapes which societal groups will meet, interact, and form social ties.

ALGORITHMIC DESIGN MATTERS!

The interconnectedness of design and algorithmic impact means that any unwanted social outcomes caused by new technologies should be seen as neither neutral nor unavoidable. The creation of filter bubbles on social media, the virality of low-quality political content, and the oversurveillance of selected areas based on predictive policing algorithms are some examples of known feedback loops in social machines. These loops exist because algorithms make inferences based on repetitious information, oftentimes leading to algorithmic self-fulfilling prophecies. Equally important, they exist because the specific algorithmic outcomes suit the objectives of those deploying and implementing the algorithmic systems in question. Lack of variability in information distributed on online platforms and targeted patrolling of specific neighbourhoods are both consequences of algorithmic design. Consequently, any algorithmic outcome is potentially value-laden, and any attempt to assess algorithmic impact starts by assessing the creators’ design decisions.
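The self-fulfilling dynamic described above can be made concrete with a deliberately simplified sketch. It is a toy model, not a description of any deployed system: the function name `simulate_patrol_feedback`, the two districts, the allocation rule (all patrols go to the district with the most recorded incidents) and every number are assumptions chosen purely for illustration.

```python
def simulate_patrol_feedback(detections_per_patrol, initial_records,
                             rounds=5, patrols=10):
    """Toy model of a predictive-policing feedback loop (illustrative only).

    Each round, all patrols are sent to the district with the most
    recorded incidents so far. Incidents are only recorded where
    patrols are sent, so the data the rule learns from is produced
    by its own earlier decisions.
    """
    records = list(initial_records)
    for _ in range(rounds):
        # "Prediction": the district with the most past records is the hot spot.
        target = max(range(len(records)), key=lambda i: records[i])
        # New data is collected only in the patrolled district.
        records[target] += patrols * detections_per_patrol[target]
    return records


# Hypothetical setup: district 1 has twice the underlying detection rate,
# but district 0 starts with one more recorded incident.
final_records = simulate_patrol_feedback(
    detections_per_patrol=[1, 2],
    initial_records=[11, 10],
)
print(final_records)  # [61, 10]
```

In this sketch a one-incident head start in the records is enough for district 0 to attract every patrol, while the district with the higher underlying rate is never observed again. The outcome follows from the allocation rule, that is, from a design decision, and not from the districts themselves.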

Since most algorithmic applications are deployed by private companies, their design is always dependent on the owners’ perception of what is considered ethical, what is necessary for achieving their goals, and what is allowed by the state. The fact that companies do not disclose how their systems work, maintaining a high level of opacity in every aspect of the models’ development and deployment, impedes the understanding of the systems and determines the relations of accountability and transparency between the state, users, and system owners. Especially when it comes to auditing algorithms and tracing their potential discriminatory impact or political influence, such design properties prevent researchers and policy makers from interpreting phenomena and from developing appropriate societal governance.

IN PURSUIT OF CIVIC MACHINES

The above issues raise the question of how social machines can be designed to serve society in an optimal way. Keeping in mind that technology should serve individuals and society in a way that ensures equality, justice, political freedom and social inclusiveness, researchers and policy makers should define principles, frameworks, and constraints that can lead to the creation of socio-algorithmic ecosystems that serve the public interest.

Putting such an ideal into practice is challenging. The good news is that it is in the self-interest of platform operators and providers of data-driven forecasting tools to repeatedly interrupt the recursive algorithmic narrowing of possibilities themselves. Filter bubbles, ingrained referral and solution procedures, and the like can only be functional up to a certain point: there is a constantly growing danger that the unobserved, excluded world will in some way become a problem for the model.

This can take various forms. In the entertainment and leisure sector, for example, users may find themselves missing variety and the excitement that it brings. Recommendations and offers will then be perceived as boring and overly predictable. Of course, we crave repetition and routine – but its excessive pursuit and fulfilment can start feeling stale and empty relatively quickly. Every now and then, an encounter with the unexpected is needed to refresh and revitalise the user experience.

The need for variety is less important in other areas, such as government and policing. But even there, challenges similarly arise from the self-aggravating dynamics of algorithmic processes. In the end, all these prediction models live in the past in some sense. The elements which they register and process as well as their modes of inference originate from past acts of thought and design. In this respect, the emergence of unknown and then unforeseeable actors, techniques, places, and trends can lead to an ever-widening gap between algorithmic forecasts and projections on the one hand, and reality on the other.

The bad news is that remedies for these computer-based narrowing tendencies – for example, regularly updating the programs or incorporating random generators that regularly surface divergent options – achieve only the bare minimum. In the end, not only technological but also organizational and economic reasons play a role here. It may take a very long time for algorithmically operated exclusions, simplifications, stereotypes or forms of discrimination to become visible to their operators. And it can take even longer before the costs of revision are finally accepted.

For public authorities, such delays can threaten legitimacy; for private-sector companies, they can threaten survival. Regular examination and adjustment of algorithmic decision-making systems is, therefore, not only an abstract moral imperative, but also a pragmatic one. Inadequate design frameworks that guide the behaviour of individuals and the application of technologies not only potentially damage the claims and rights of third parties; they also endanger the operators themselves through their inappropriate exclusions and selections.

The concept of Civic Machines addresses the socio-political dimensions of this problem. Civic Machines are oriented to the fact that the world is in flux and that algorithmic options come from the past – and that rigid structures and ways of thinking thus inevitably lead to states of tension, which can be a burden both to the executing institution and to the surrounding society and its standards of inclusion, equality, and justice. Civic Machines are systems that systematically give the Other a chance and cultivate openness and the ability to learn in anticipation of futures which will inevitably arrive.

Lingg and Papakyriakopoulos have long shared a common interest in learning and research. For our themed issue, this joint thinking was put to work in a contribution spanning continents. We would like to further develop the exciting connection to Orestis P., who now conducts research at Princeton University, for the WittenLab.


Dr. Orestis Papakyriakopoulos is a postdoctoral research associate at the Center for Information Technology Policy at Princeton University. His research showcases political issues and provides ideas, frameworks, and practical solutions towards just, inclusive and participatory socio-algorithmic ecosystems through the application of data-intensive algorithms and social theories.

Currently he is preparing an online political transparency dashboard for the 2021 German Federal Elections – stay tuned!

Dr. Andreas Lingg is a research associate at the Senior Professorship for Economics and Philosophy at the UW/H. He conducts research at the interface of economic history, the history of economic thought, and economic philosophy.